3. Model Swarms: Collaborative Search to Adapt LLM Experts via Swarm Intelligence

AI Paper By Hand

The paper MODEL SWARMS: COLLABORATIVE SEARCH TO ADAPT LLM EXPERTS VIA SWARM INTELLIGENCE from Google proposes an algorithm that searches over LLM experts, based on the idea of collective intelligence guiding individual systems.

The part I found interesting is that it doesn't need any supervised fine-tuning data or pre-existing knowledge of the LLM experts; it relies solely on collaborative search.

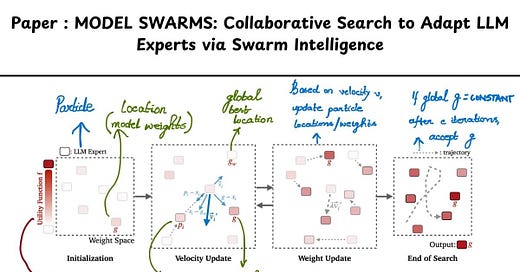

There are four main components for this algorithm:

1. The LLM expert or the "particle" (full model/LoRA adapters)

2. Initial particle location (model weights) and velocity (direction in the weight space)

3. Personal best, global best and global worst locations

4. Utility function that needs to be optimized for model adaptation

The location and velocity of each particle drive the active search over LLM experts (a lot like gradient descent looking for the best direction), while the checkpoints (personal best, global best and global worst) keep track of which regions of the weight space have scored well or badly so far.
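To make the four components concrete, here is a minimal toy sketch of a swarm update in the spirit of particle swarm optimization. It is not the paper's exact update rule: the coefficient names (`inertia`, `c_personal`, `c_global`, `c_worst`, `step_size`), their values, and the `utility` function are all my assumptions, and the "weights" are just 3-dimensional vectors rather than real LLM parameters.

```python
import random

def utility(x):
    # Hypothetical stand-in for the utility function. In Model Swarms this
    # would score an LLM expert (e.g. on a small validation set); here it is
    # the negative squared distance to a made-up target point.
    target = [1.0, -2.0, 0.5]
    return -sum((xi - ti) * (xi - ti) for xi, ti in zip(x, target))

def swarm_search(n_particles=8, dim=3, steps=200, seed=0,
                 inertia=0.5, c_personal=0.5, c_global=0.5, c_worst=0.1,
                 step_size=0.5):
    rng = random.Random(seed)
    # Location = "model weights"; velocity = direction in weight space.
    xs = [[rng.uniform(-3, 3) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]

    # Checkpoints: personal best per particle, plus global best and worst.
    personal_best = [x[:] for x in xs]
    personal_best_f = [utility(x) for x in xs]
    g = max(range(n_particles), key=lambda i: personal_best_f[i])
    w = min(range(n_particles), key=lambda i: personal_best_f[i])
    global_best, global_best_f = personal_best[g][:], personal_best_f[g]
    global_worst, global_worst_f = personal_best[w][:], personal_best_f[w]
    initial_best_f = global_best_f

    for _ in range(steps):
        for i in range(n_particles):
            r_p, r_g, r_w = rng.random(), rng.random(), rng.random()
            for d in range(dim):
                # Keep some momentum, move toward the personal and global
                # best, and move away from the global worst.
                vs[i][d] = (inertia * vs[i][d]
                            + c_personal * r_p * (personal_best[i][d] - xs[i][d])
                            + c_global * r_g * (global_best[d] - xs[i][d])
                            - c_worst * r_w * (global_worst[d] - xs[i][d]))
                xs[i][d] += step_size * vs[i][d]

            f = utility(xs[i])
            if f > personal_best_f[i]:
                personal_best[i], personal_best_f[i] = xs[i][:], f
            if f > global_best_f:
                global_best, global_best_f = xs[i][:], f
            if f < global_worst_f:
                global_worst, global_worst_f = xs[i][:], f

    return global_best, global_best_f, initial_best_f

if __name__ == "__main__":
    best, best_f, init_f = swarm_search()
    print("best location:", best)
    print("utility: initial best", init_f, "-> final best", best_f)
```

Note that nothing here computes a gradient: the only signal is the utility score of each particle, which is why the approach needs a utility function but no fine-tuning data.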

Based on the paper, MODEL SWARMS achieves better performance across four main objectives:

- Single-task (working with as few as 200 instances)

- Multi-task

- Reward Model

- Human Interest

Overall, it improves over 12 model composition baselines by up to 21.0%.

Paper: https://arxiv.org/pdf/2410.11163