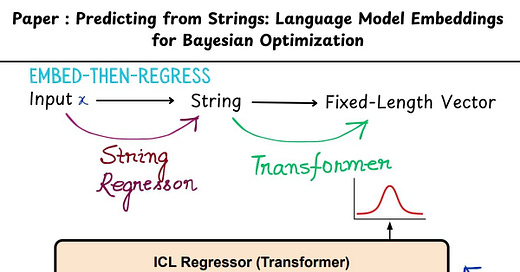

2. Predicting from Strings: Language Model Embeddings for Bayesian Optimization

AI Paper By Hand

A very interesting paper from Google DeepMind, "Predicting from Strings: Language Model Embeddings for Bayesian Optimization", proposes converting inputs to strings and then embedding those strings with a pre-trained language model, so that the embeddings can be used as features for regression.

Core idea: represent the search space of a problem as raw strings, so that a single general-purpose regressor can support optimization across various domains.

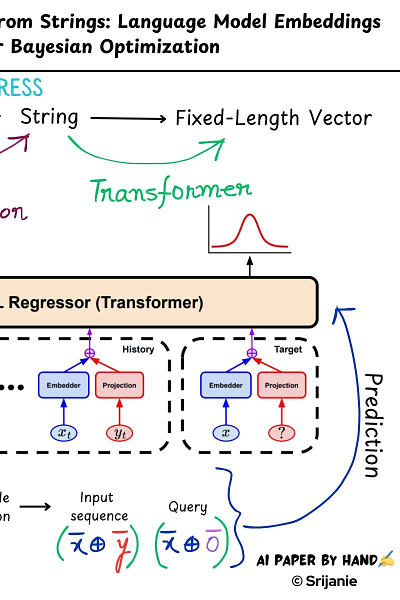

The string-based regressor starts with an input 𝘅, which is converted to a string. The string is then passed through a language model to produce a fixed-length vector embedding.
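The string-then-embed step can be sketched roughly as follows. This is a minimal illustration, not the paper's code: `to_string`, `embed`, and `EMBED_DIM` are hypothetical names, the JSON serialization is an assumed format, and the hashed character-trigram embedding is only a stand-in for a real pre-trained language model encoder. What it does show is the key property: strings of any length map to vectors of the same fixed length.

```python
import hashlib
import json

import numpy as np

EMBED_DIM = 64  # hypothetical embedding size, chosen only for illustration


def to_string(x: dict) -> str:
    """Serialize an input (here a dict of parameters) into a canonical string."""
    return json.dumps(x, sort_keys=True)


def embed(text: str, dim: int = EMBED_DIM) -> np.ndarray:
    """Stand-in for a pre-trained language model encoder (an assumption).

    Hashes character trigrams into a fixed-length count vector and
    L2-normalizes it. A real setup would call a pre-trained encoder and
    pool its outputs instead.
    """
    vec = np.zeros(dim)
    for i in range(len(text) - 2):
        digest = hashlib.md5(text[i:i + 3].encode("utf-8")).digest()
        idx = int.from_bytes(digest[:4], "little") % dim
        vec[idx] += 1.0
    norm = np.linalg.norm(vec)
    return vec / norm if norm > 0 else vec


# Example input: a hyperparameter configuration mixing numbers and categories.
x = {"learning_rate": 0.01, "optimizer": "adam", "layers": 3}
s = to_string(x)
e = embed(s)
print(s)
print(e.shape)
```

Because the input is just a string, numeric, categorical, and even free-form fields can share one representation without hand-built feature encodings.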

In the next step, the string embedding passes through a trainable projection and into another transformer, which takes in all previous trials and produces the output for trial 𝙩+1.
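To make the "project, then look at previous trials" idea concrete, here is a heavily simplified sketch. It is an assumption-laden toy, not the paper's architecture: a single softmax-attention step replaces the full transformer, the projection `W` is random rather than trained, and the embeddings and outputs are random placeholders. All names (`predict_next`, `history_emb`, `MODEL_DIM`, and so on) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

EMBED_DIM, MODEL_DIM = 64, 16  # hypothetical sizes, for illustration only

# Placeholders: embeddings of t = 5 previous inputs, their observed outputs,
# and the embedding of the new input for trial t+1.
history_emb = rng.normal(size=(5, EMBED_DIM))
history_y = rng.normal(size=5)
query_emb = rng.normal(size=EMBED_DIM)

# Projection matrix: trainable in a real model, random here.
W = rng.normal(size=(EMBED_DIM, MODEL_DIM)) / np.sqrt(EMBED_DIM)


def predict_next(history_emb, history_y, query_emb, W):
    """Predict y for trial t+1 as an attention-weighted average of past outputs."""
    keys = history_emb @ W                        # project previous trials
    query = query_emb @ W                         # project the new input
    scores = keys @ query / np.sqrt(W.shape[1])   # scaled dot-product scores
    weights = np.exp(scores - scores.max())       # numerically stable softmax
    weights /= weights.sum()
    return float(weights @ history_y), weights


y_pred, attn = predict_next(history_emb, history_y, query_emb, W)
print(y_pred, attn)
```

In this toy version the prediction is always a convex combination of previously observed outputs; a trained transformer is far more expressive, but the data flow (embedding, projection, attention over the trial history, prediction for the next trial) follows the same outline.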

The paper calls this technique "𝗲𝗺𝗯𝗲𝗱-𝘁𝗵𝗲𝗻-𝗿𝗲𝗴𝗿𝗲𝘀𝘀". It makes regression methods much more versatile, because inputs can be represented flexibly as raw strings and turned into features by LLM-based embeddings. Regression using LLM embeddings, how cool is that?! 😀

Paper: https://arxiv.org/pdf/2410.10190