Combine RAG and Monte Carlo Tree Search (MCTS) and you get RuAG, a very interesting approach for injecting knowledge learned from data into an LLM. Here is a PDF of my annotated version of the paper. I hope it helps you grasp the central idea behind this cool approach! 💡

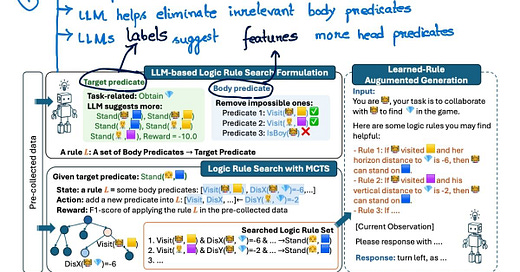

RuAG (learned-Rule-Augmented Generation) is a novel framework that uses MCTS to distill large volumes of data into first-order logic rules. These rules are then translated into natural language and used to seamlessly augment an LLM's knowledge.
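As a rough illustration of that last stage, the sketch below represents a learned rule as a body (a conjunction of predicates) implying a target predicate, renders it as an English sentence, and prepends the sentences to a task prompt. The `Rule` class, the sentence template, `augment_prompt`, and the example predicates are all hypothetical. The post only says the rules are translated into natural language, so the wording and prompt layout here are assumptions, not the paper's format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical container for one learned rule: body => target."""
    body: tuple[str, ...]  # conjunction of body predicates
    target: str            # target predicate
    precision: float       # fraction of matching rows where the rule held

    def to_text(self) -> str:
        # Simple template translation (assumed; not the paper's wording).
        conditions = " and ".join(p.replace("_", " ") for p in self.body)
        return (f"If {conditions}, then {self.target.replace('_', ' ')} "
                f"(held in {self.precision:.0%} of matching cases).")


def augment_prompt(question: str, rules: list[Rule]) -> str:
    """Prepend the translated rules to the task prompt as extra knowledge."""
    knowledge = "\n".join(f"- {r.to_text()}" for r in rules)
    return f"Useful rules learned from data:\n{knowledge}\n\nTask: {question}"


if __name__ == "__main__":
    rules = [Rule(("night_login", "new_device"), "anomaly", 1.0)]
    print(augment_prompt("Is this login anomalous?", rules))
```

Because the rules arrive as plain sentences, the LLM itself needs no retraining; the same prompt could also carry retrieved documents, which is where the comparison with RAG comes from.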

The way RuAG works can be summarized as:

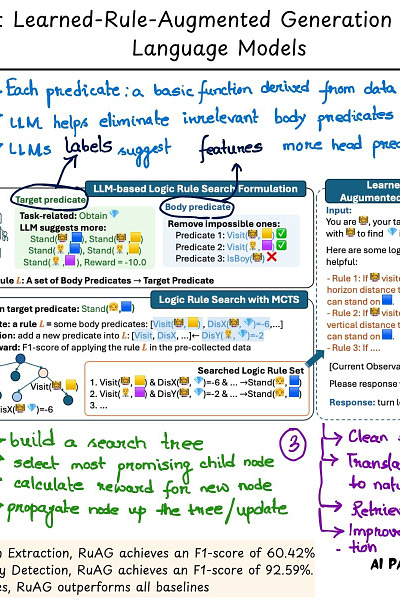

1. It starts with features (body predicates) and labels (target predicates). The target predicate is defined to be task-relevant, while the body predicates are initialized as all the data attributes in the dataset. Based on the task and its description, an LLM then generates new target predicates and eliminates the most irrelevant body predicates.

2. Next, RuAG uses MCTS over this automatically formulated search space to generate a set of first-order logic rules. MCTS builds a search tree and simulates outcomes to estimate the value of actions: it selects the most promising child node, expands it, calculates the reward for the new node, and propagates that reward back up the tree.

3. Finally, the searched rules are cleaned and translated into natural language, which is then used to improve LLM generation.
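To make steps 1 and 2 more concrete, here is a minimal Python sketch of MCTS-based rule search on a toy anomaly-detection table. Everything in it is an assumption for illustration and not the paper's implementation: the data, the predicate names, the hard-coded predicate list standing in for the LLM pruning step, the reward (rule precision with a minimum-support cutoff), and the UCT constant. Each tree node is a conjunction of body predicates, expanding a node adds one more predicate, and the new rule `body => anomaly` is scored directly.

```python
import math
import random

# Hypothetical toy table: boolean body predicates plus the target predicate.
DATA = [
    {"night_login": True, "new_device": True, "vpn": False, "anomaly": True},
    {"night_login": True, "new_device": True, "vpn": True, "anomaly": True},
    {"night_login": False, "new_device": True, "vpn": False, "anomaly": False},
    {"night_login": True, "new_device": False, "vpn": False, "anomaly": False},
    {"night_login": False, "new_device": False, "vpn": True, "anomaly": False},
    {"night_login": True, "new_device": True, "vpn": False, "anomaly": True},
]
TARGET = "anomaly"
# Stand-in for step 1: body predicates an LLM might keep after pruning.
BODY_PREDICATES = ["night_login", "new_device", "vpn"]
MAX_LEN = 2      # max predicates per rule body (assumed)
MIN_SUPPORT = 2  # rules matching fewer rows get reward 0 (assumed)


def reward(body):
    """Precision of the rule `body => TARGET` on DATA (0 if support is too low)."""
    covered = [row for row in DATA if all(row[p] for p in body)]
    if len(covered) < MIN_SUPPORT:
        return 0.0
    return sum(row[TARGET] for row in covered) / len(covered)


class Node:
    def __init__(self, body, parent=None):
        self.body = body      # tuple of predicate names (a conjunction)
        self.parent = parent
        self.children = []
        self.visits = 0
        self.value = 0.0      # sum of rewards backpropagated through this node
        # Only add predicates listed after the last one in the body,
        # so each conjunction appears exactly once in the tree.
        start = BODY_PREDICATES.index(body[-1]) + 1 if body else 0
        self.untried = BODY_PREDICATES[start:] if len(body) < MAX_LEN else []

    def uct_child(self, c=1.4):
        # UCT score: mean reward plus an exploration bonus.
        return max(
            self.children,
            key=lambda ch: ch.value / ch.visits
            + c * math.sqrt(math.log(self.visits) / ch.visits),
        )


def mcts(iterations=200, seed=0):
    rng = random.Random(seed)
    root = Node(())
    scored = {}
    for _ in range(iterations):
        node = root
        # 1. Selection: descend while the node is fully expanded.
        while not node.untried and node.children:
            node = node.uct_child()
        # 2. Expansion: add one untried predicate to the rule body.
        if node.untried:
            pred = node.untried.pop(rng.randrange(len(node.untried)))
            child = Node(node.body + (pred,), parent=node)
            node.children.append(child)
            node = child
        # 3. Evaluation: score the new rule directly (no random rollout).
        r = reward(node.body)
        scored[node.body] = r
        # 4. Backpropagation: add the reward to every node up to the root.
        while node is not None:
            node.visits += 1
            node.value += r
            node = node.parent
    return scored


def top_rules(scored, threshold=0.99):
    """Keep non-empty rule bodies whose reward clears the threshold."""
    return sorted(b for b, r in scored.items() if b and r >= threshold)


if __name__ == "__main__":
    scored = mcts()
    for body in top_rules(scored):
        print(" AND ".join(body), "=>", TARGET, f"(precision {scored[body]:.2f})")
    # prints: night_login AND new_device => anomaly (precision 1.00)
```

Scoring each new node directly keeps the sketch short; a fuller MCTS would roll out to a complete rule before scoring, and the paper's actual reward and search settings may differ. On real data, the surviving rules would then go through step 3 (cleaning and translation into natural language).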

--------- Results ----------

- For relation extraction, RuAG achieves an F1-score of 60.42%.

- For anomaly detection, RuAG achieves an F1-score of 92.59%.

In both cases, RuAG outperforms all the baselines it is compared against 💡

Paper : https://arxiv.org/abs/2411.03349